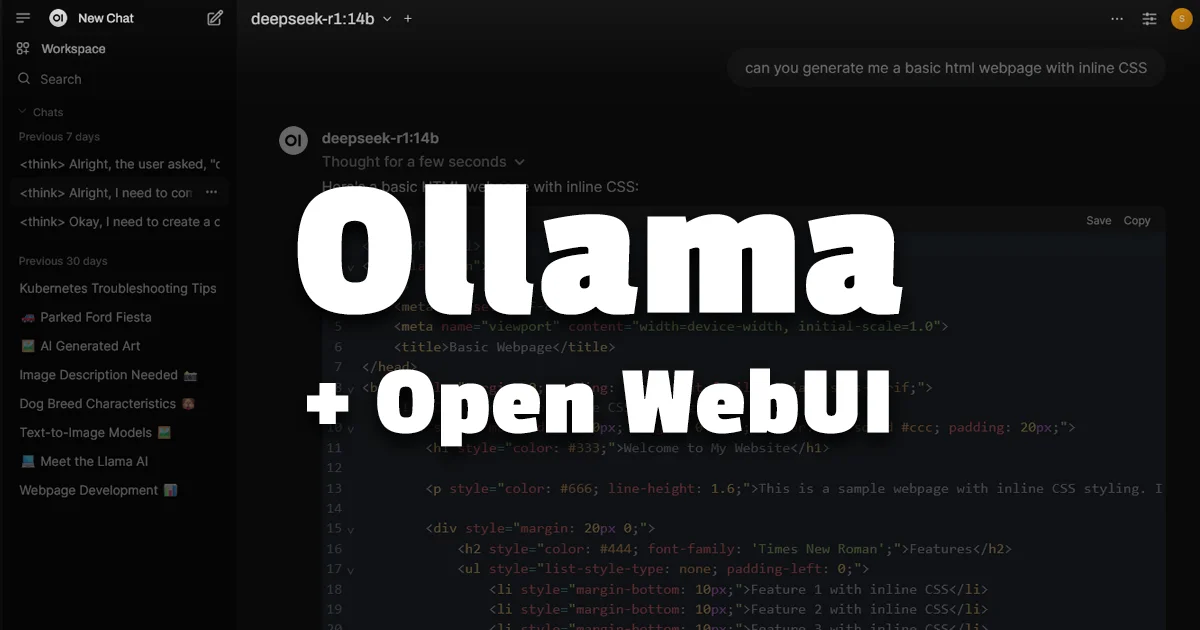

Ollama - Local Large Language Models (LLMs) + Open WebUI

https://hub.docker.com/r/ollama/ollama

Ollama is an open-source tool that runs large language models (LLMs) directly on a local machine. This makes it particularly appealing to AI developers, researchers, and businesses concerned with data control and privacy.

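By running models locally, you keep full ownership of your data and avoid the security risks of sending prompts and documents to a third-party cloud service. Offline tools like Ollama also remove the network round trip and the dependence on external servers, so availability no longer hinges on someone else's service. Actual generation speed still depends on your own hardware, especially the GPU.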

I use Ollama to run various LLMs. My current favourites are Llama, DeepSeek and LLaVA.
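A minimal sketch of talking to a local Ollama instance from Python, using only the standard library. It assumes Ollama is listening on its default port 11434 (with the Docker image, that means the port was published, e.g. `-p 11434:11434`) and that a model tagged `llama3.2` has already been pulled; both are assumptions, so substitute your own host and model tag. It posts to Ollama's `/api/generate` endpoint with streaming turned off, so the whole answer comes back as a single JSON object whose `response` field holds the generated text.

```python
import json
import urllib.request

# Assumption: Ollama runs locally on its default port 11434.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,    # model tag, e.g. "llama3.2" (assumed to be pulled already)
        "prompt": prompt,
        "stream": False,   # ask for one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to Ollama and return the generated text."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With streaming disabled, the full answer is in the "response" field.
    return body["response"]


if __name__ == "__main__":
    print(generate("llama3.2", "Explain what a context window is in one sentence."))
```

Keeping request construction separate from the network call makes the payload easy to inspect or test without a running server; only `generate` actually needs Ollama to be up.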

Open WebUI

https://ghcr.io/open-webui/open-webui

Open WebUI is an extensible, self-hosted AI interface that adapts to your workflow, all while operating entirely offline.

In practice, it is a browser-based chat front end for Ollama, OpenAI and other OpenAI-compatible back ends.
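One reason a single front end can serve both Ollama and OpenAI is that Ollama also exposes an OpenAI-compatible chat endpoint under `/v1`. The sketch below is only an illustration of that shared request shape, not how Open WebUI is implemented internally. It assumes Ollama on `localhost:11434` and a pulled `llama3.2` model; the `api_key` parameter is a hypothetical convenience for pointing the same code at a hosted OpenAI-style service, which requires a bearer token (Ollama does not).

```python
import json
import urllib.request
from typing import Optional

# Assumption: local Ollama on its default port, using its OpenAI-compatible API.
DEFAULT_BASE_URL = "http://localhost:11434/v1/chat/completions"


def build_chat_request(
    model: str,
    messages: list,
    base_url: str = DEFAULT_BASE_URL,
    api_key: Optional[str] = None,
) -> urllib.request.Request:
    """Build an OpenAI-style chat completions request."""
    headers = {"Content-Type": "application/json"}
    if api_key is not None:
        # Hosted OpenAI-style APIs expect a bearer token; local Ollama does not.
        headers["Authorization"] = f"Bearer {api_key}"
    payload = {"model": model, "messages": messages}
    return urllib.request.Request(
        base_url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def chat(model: str, user_message: str, timeout: float = 120.0) -> str:
    """Send one user message to the local endpoint and return the reply text."""
    messages = [{"role": "user", "content": user_message}]
    req = build_chat_request(model, messages)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # OpenAI-style responses put the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(chat("llama3.2", "Say hello in five words."))
```

Only the URL, model name and optional key change between back ends; the message format stays the same, which is what lets a UI switch providers without rewriting its chat logic.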